Anthropic just launched a new model called Claude 3.7 Sonnet, and while I’m always interested in the latest AI capabilities, it was the new “extended” mode that really caught my eye. It reminded me of how OpenAI first debuted its o1 model for ChatGPT. It offered a way of accessing o1 without leaving a window using the ChatGPT 4o model: you could type “/reason,” and the AI chatbot would use o1 instead. It’s superfluous now, though it still works on the app. Regardless, the deeper, more structured reasoning promised by both made me want to see how they’d do against one another.

Claude 3.7’s Extended mode is designed to be a hybrid reasoning tool, giving users the option to toggle between quick, conversational responses and in-depth, step-by-step problem-solving. It takes time to analyze your prompt before delivering its answer. That makes it great for math, coding, and logic. You can even fine-tune the balance between speed and depth, giving it a time limit to think about its response. Anthropic positions this as a way to make AI more useful for real-world applications that require layered, methodical problem-solving, as opposed to just surface-level responses.

Accessing Claude 3.7’s Extended thinking requires a subscription to Claude Pro, so I decided to use the demonstration in the video below as my test instead. To challenge the Extended thinking mode, Anthropic asked the AI to analyze and explain the popular, classic probability puzzle known as the Monty Hall Problem. It’s a deceptively tricky question that stumps a lot of people, even those who consider themselves good at math.

The setup is simple: you’re on a game show and asked to pick one of three doors. Behind one is a car; behind the others, goats. On a whim, Anthropic decided to go with crabs instead of goats, but the principle is the same. After you make your choice, the host, who knows what’s behind each door, opens one of the remaining two to reveal a goat (or crab). Now you have a choice: stick with your original pick or switch to the last unopened door. Most people assume it doesn’t matter, but counterintuitively, switching actually gives you a 2/3 chance of winning, while sticking with your first choice leaves you with just a 1/3 probability.
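If the 2/3 figure seems hard to believe, it can be checked by listing every possible game. The Python sketch below is my own illustration, not anything produced by Claude or ChatGPT, and the function name `exact_win_probability` is made up for this example. It assumes the car and your first pick are each equally likely to be any of the three doors, and that the host chooses at random when two crab doors are available to open.

```python
from fractions import Fraction
from itertools import product

DOORS = range(3)


def exact_win_probability(switch: bool) -> Fraction:
    """Sum the probability of every game in which the player wins the car."""
    total = Fraction(0)
    # Each (car, first pick) pair has probability 1/9.
    for car, pick in product(DOORS, repeat=2):
        # The host may only open a door that is neither your pick nor the car.
        host_options = [d for d in DOORS if d not in (pick, car)]
        for opened in host_options:
            # Split the 1/9 evenly across the doors the host could open.
            weight = Fraction(1, 9) / len(host_options)
            final = pick
            if switch:
                # Move to the one door that is neither picked nor opened.
                final = next(d for d in DOORS if d not in (pick, opened))
            if final == car:
                total += weight
    return total


if __name__ == "__main__":
    print("switch:", exact_win_probability(True))
    print("stay:  ", exact_win_probability(False))
```

The enumeration shows why the numbers come out the way they do: switching wins in every game where your first pick was wrong, and that happens two times out of three.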

Crabby Choices

With Extended thinking enabled, Claude 3.7 took a measured, almost academic approach to explaining the problem. Instead of just stating the correct answer, it carefully laid out the underlying logic in several steps, emphasizing why the probabilities shift after the host reveals a crab. It didn’t just explain in dry math terms, either. Claude ran through hypothetical scenarios, demonstrating how the probabilities played out over repeated trials, making it much easier to grasp why switching is always the better move. The response wasn’t rushed; it felt like having a professor walk me through it in a slow, deliberate manner, ensuring I really understood why the common intuition was wrong.
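The “repeated trials” idea is easy to try for yourself. What follows is a minimal sketch of a Monte Carlo simulation in Python; it is not the code Claude generated, and the names `play_round` and `win_rate`, the trial count, and the fixed seed are all choices made for this illustration.

```python
import random

DOORS = (0, 1, 2)


def play_round(switch: bool, rng: random.Random) -> bool:
    """Play one game and report whether the player ends up with the car."""
    car = rng.choice(DOORS)
    pick = rng.choice(DOORS)
    # The host knows where the car is and never opens that door or yours.
    opened = rng.choice([d for d in DOORS if d != pick and d != car])
    if switch:
        # Take the only door that is neither your first pick nor the opened one.
        pick = next(d for d in DOORS if d != pick and d != opened)
    return pick == car


def win_rate(switch: bool, trials: int = 100_000, seed: int = 0) -> float:
    """Estimate the chance of winning by playing many independent games."""
    rng = random.Random(seed)
    wins = sum(play_round(switch, rng) for _ in range(trials))
    return wins / trials


if __name__ == "__main__":
    print(f"switch: {win_rate(True):.3f}")
    print(f"stay:   {win_rate(False):.3f}")
```

Over enough games, the switching strategy should settle near a two-in-three win rate and the staying strategy near one in three.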

ChatGPT o1 offered just as much of a breakdown, and explained the issue well. In fact, it explained it in several forms and styles. Along with the basic probability, it also went through game theory, the narrative views, the psychological experience, and even an economic breakdown. If anything, it was a little overwhelming.

Gameplay

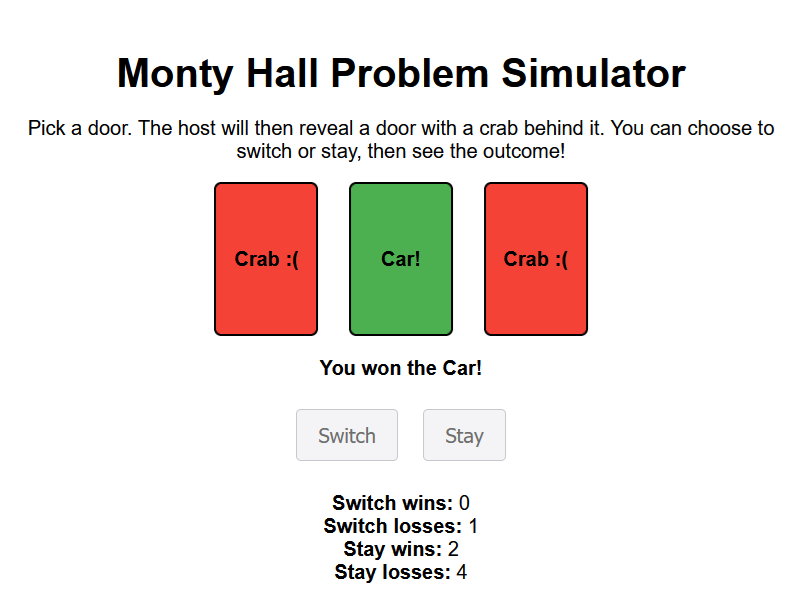

That’s not all Claude’s Extended thinking could do, though. As you can see in the video, Claude was even able to turn a version of the Monty Hall Problem into a game you could play right in the window. Trying the same prompt with ChatGPT o1 didn’t do quite the same. Instead, ChatGPT wrote an HTML script for a simulation of the problem that I could save and open in my browser. It worked, as you can see below, but took a few extra steps.

While there are almost certainly small differences in quality depending on what kind of code or math you’re working on, both Claude’s Extended thinking and ChatGPT’s o1 model offer solid, analytical approaches to logical problems. I can see the advantage of adjusting the time and depth of reasoning that Claude offers. That said, unless you’re really in a hurry or demand an unusually heavy bit of analysis, ChatGPT doesn’t take up too much time and produces quite a bit of content from its pondering.

The ability to render the problem as a simulation within the chat is much more notable. It makes Claude feel more flexible and powerful, even if the actual simulation likely uses very similar code to the HTML written by ChatGPT.